Behind the Mask of Madgicx Plus: A Chrome Extension Campaign Targeting Meta Advertisers

In this Threat Analysis Report, Cybereason analyzes an investigation into a new malicious Chrome extension campaign

Cybereason Security Services Team

Many people have had the experience of buying a product for personal use that has all the capability they could wish for, and more. When you actually start using it, you discover that you don’t have the expertise, or perhaps the time, to really get the best out of it, or that the way the product is designed turns getting the result you need into an overly complex set of tasks - TV/video recorders are classic examples.

The same is true in business, where these days we focus too often on capability alone. That focus has left us with a very human-centric approach; if we are to scale, we need to look at the operational overheads and the usability of our cybersecurity tools.

Many years ago, I was involved with a government organization that evaluated early sandboxing technologies. They made the decision to go ahead with their own testing to see how well each solution detected unknown attacks. The results were fairly comparable in terms of detections and so the organization deemed all the solutions pretty equal. For me, the results were surprising, so I dug deeper into the methodology to see if it was really the case.

What I found was that, whilst they had tested the capability of detection, they hadn’t thought about the time and motion required to achieve the results. Plus, a whole team had worked on the testing, whereas in reality this would only be a small part of one person's role. In addition, aspects such as the ratio of true positives to false positives weren’t considered - which can significantly change the time to detect if you’re fighting through unwanted noise.

As such, whilst the different technologies all achieved the desired goal of detecting unknown attacks, the effort involved varied greatly.

You can always look at either your own or a third party's testing of cybersecurity capabilities, and the outcomes and detections found will be the same. Yet skill levels and staff capacity vary from one organization to the next.

When looking at any technology, businesses must assess their own technical capabilities. The key to this is that any testing should reflect real world usage and staff.

At a similar point in my career we acquired Mandiant, which at that time was a consulting business focused on Incident Response (IR) that had some nascent EDR technology. The EDR product grew out of the bespoke IR and forensics capabilities the team had built out in the field. We quickly realized that the technical knowledge required to use many of the capabilities was beyond the skills of the majority of analysts and responders. In fact, we were drowning them in very technically rich, detailed evidence.

To remedy this, we built a lightweight version of the product which cut the volume of information gathered 20-fold, monitoring only the most common points of intrusion onto an endpoint. For some, this was a workable version, but for others, despite the reduced volume of security data, it was still too technical to quickly and easily comprehend and act on. To alleviate this, we decided to launch an outcome-based managed service around the capability.

You may decide for one of many reasons that it's not financially viable for your company to hire the staff required to gain the value you want from such a solution. So instead, you choose to take on an outcome-based service. Either way, there needs to be a skills and technology match.

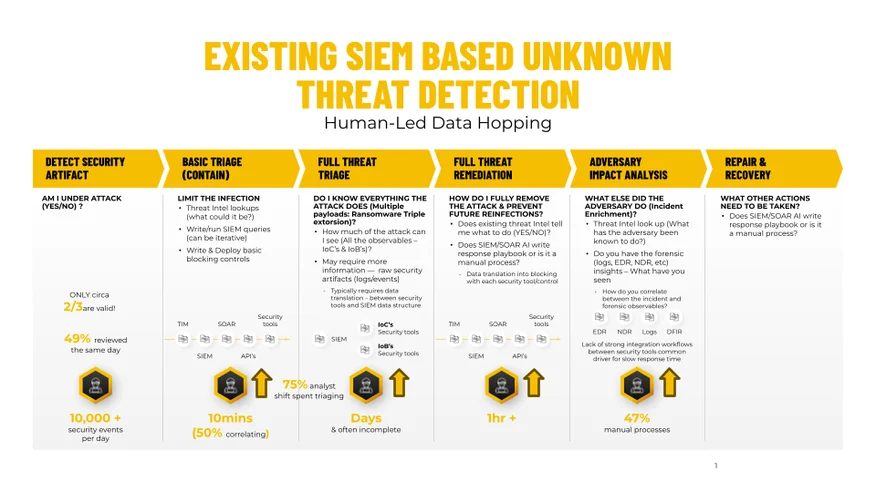

Why all the trips down memory lane, you may ask? Well, the technology world continues to evolve, especially in the cybersecurity space. EDR has evolved into XDR, which in turn has evolved into SDR. At each of these levels, the volume of technical knowledge grows and with it, the skills required to gain the desired value from them. At the same time, if you ask most organizations, their most common metrics of success are the Mean Time To Detect/Triage/Respond.

We don't look at enough of the right metrics. So what other metrics should businesses be considering when they evaluate the visibility fit of a cybersecurity technology for the business?

Working with the SOC team in my last company, we focused on the HOW (the journey), not just the WHAT (the outcome). For example, we knew the baselines at the key stages of our incident handling, and we would continually explore the factors that were working against them.
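As a rough illustration of tracking baselines at the key stages of incident handling, the sketch below computes the mean time spent in detection, triage and response across a set of incidents and compares it with a baseline. The `Incident` fields, the stage names and the baseline values are all assumptions made for this example, not the data model of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean

STAGES = ("detect", "triage", "respond")


@dataclass
class Incident:
    # Hypothetical field names; map them to whatever your case tool records.
    started: datetime   # first malicious activity
    detected: datetime  # alert raised
    triaged: datetime   # analyst confirmed scope
    resolved: datetime  # response completed


def stage_minutes(incident):
    """Return the minutes one incident spent in each stage."""
    def minutes(begin, end):
        return (end - begin).total_seconds() / 60

    return {
        "detect": minutes(incident.started, incident.detected),
        "triage": minutes(incident.detected, incident.triaged),
        "respond": minutes(incident.triaged, incident.resolved),
    }


def mean_stage_minutes(incidents):
    """Mean time per stage across all incidents, in minutes."""
    per_incident = [stage_minutes(i) for i in incidents]
    return {stage: mean(p[stage] for p in per_incident) for stage in STAGES}


def drift_from_baseline(current, baseline):
    """Positive values mean a stage is now slower than its baseline."""
    return {stage: current[stage] - baseline[stage] for stage in STAGES}
```

Calling `drift_from_baseline(mean_stage_minutes(incidents), baseline)` at regular intervals gives a simple view of which stage is slipping, which is where the search for influencing factors would start.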

Detection time: What typically impacts detection time is the total number of alerts being generated. Are these being aggregated in a smart way? What percentage of those alerts are false positives? And how much tuning is required on setup to keep alerts within acceptable levels going forward? What percentage of threats can you detect and block without human input? What's the percentage of alerts that you think are malicious but require human verification?
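The percentages asked about above can be tracked with very little tooling. The following is a minimal sketch, assuming each alert has already been given one of three disposition labels; the label names are hypothetical and would need to match your own alert workflow.

```python
from collections import Counter

# Hypothetical disposition labels; rename to match your own alert workflow.
LABELS = ("false_positive", "auto_blocked", "needs_review")


def alert_mix(dispositions):
    """Return the percentage of alerts that fall under each label."""
    counts = Counter(dispositions)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        # No alerts in the period: report zero for every label.
        return {label: 0.0 for label in LABELS}
    return {label: 100 * counts[label] / total for label in LABELS}
```

Comparing this mix before and after a tuning exercise shows whether the effort reduced noise or simply moved alerts from one bucket to another.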

Triage time: The majority of a SOC analyst’s time is spent in the space between the basic triage needed for containment and the full triage that's required for remediation and recovery. At the simplest level, the question to ask is: how much evidence do I need in order to confidently understand the attack and see what the adversary might do? Whilst this sounds simple, we have seen the number of observables (the indicators of compromise and indicators of behavior - IoCs and IoBs) steadily growing. This means that seeing the attack with confidence, and knowing whether it actually worked in your environment and what the adversary behind it has done, typically involves a lot of console hopping.

There are still ongoing questions around the process, such as:

Response time: This is perhaps the hardest to measure, as what's encapsulated in response and recovery varies. However, most typically, the first question is: do you actually have the forensic evidence, or was it deleted days or weeks ago due to costs? If you have the data, how easy is it to retrieve? Do you have the skills to understand it, or are you back to my second observation, where in such instances, you decide to move to an outcome-based service? The challenge here becomes whether your organization wants to install their own forensics tools after the fact. The other aspect of response is ensuring the threat doesn’t return successfully in the future. Do you have the skills and visibility to ensure this? If you haven’t seen all of the malicious operations, how do you know if you have put in mitigations against all the required aspects?

Some CISOs I know work on the premise that for every one new technology deployed, two should be removed. I wonder how far we could progress if we applied a similar principle to the operational aspects of cybersecurity: every new addition must halve the time it takes to complete the specific task it supports. It’s a principle that is much tougher to achieve, especially as we keep adding more layers of complexity to cybersecurity.

Take some of the key steps (outlined below) and think about what the key journey metrics should be to get you on the road to improving operational efficiency. I suspect that as you go through the process, most of you will start to consider what it takes to do this manually, whether it can be automated, or whether you should simply outsource and take a managed-solution approach.

| Key Steps | Time (outcome) | Accuracy & Usability (journey) |
| --- | --- | --- |
